An AI Adventure

May 9, 2015

This week I spent a lot of time thinking about AI, which is essential for replicating the experience of an asymmetrical multiplayer game in single player. I feel it is important for the game to succeed in single player first, either as a standalone experience or as a way to get the player familiar and comfortable with the game before an eventual foray into multiplayer. It also allows me to upload a portion of my consciousness to the internet to live on forever in robotic form and win countless games for me…

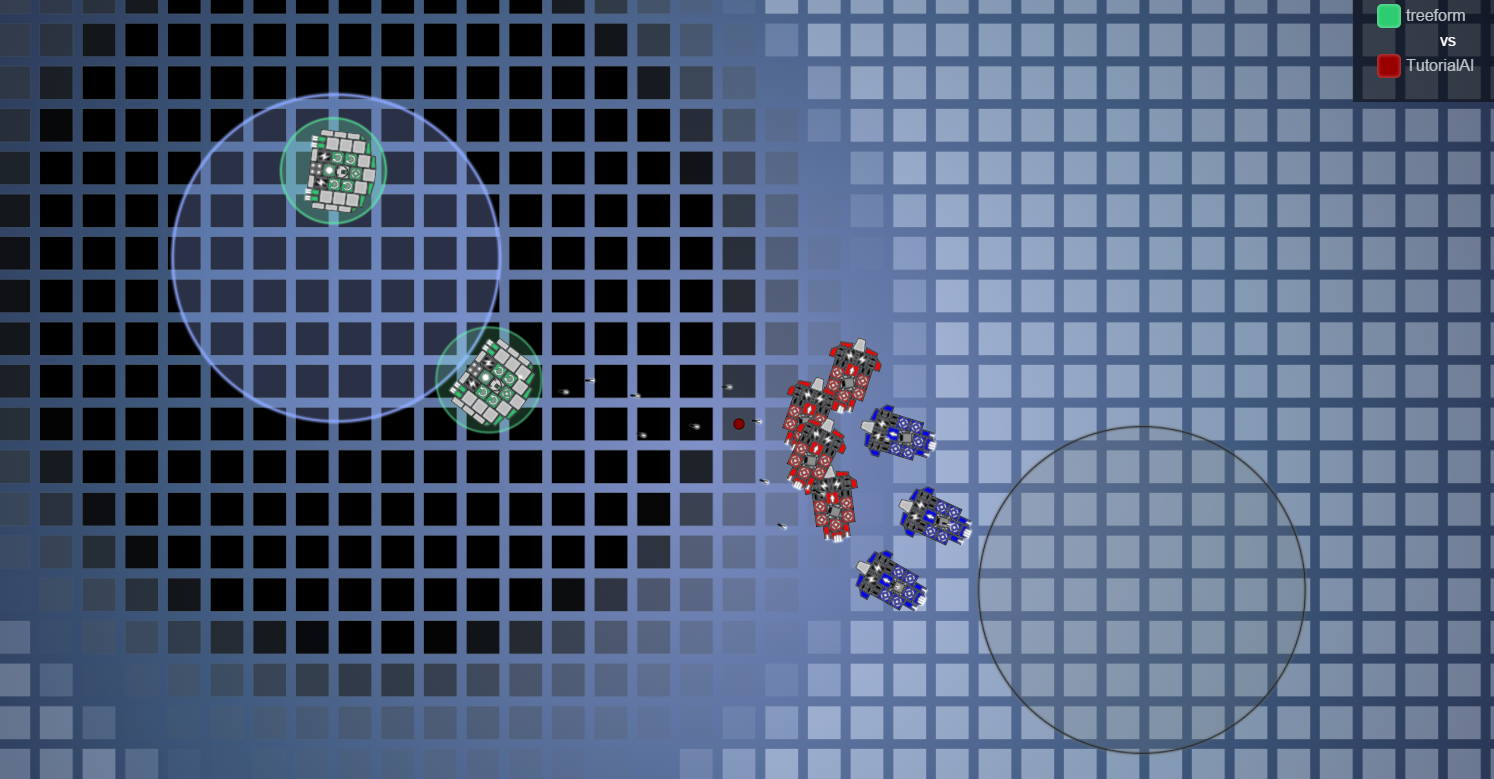

First thing I did to improve the AI is introduce potential damage fields. Each unit in the game produces a field around them in the form of potential damage it can do. AI's unit create a positive field, the human player a negative one. AI units try avoid negative damage fields on the map until their own positive fields cancel it out, because this represents that they will deal less damage than the enemy if they engage them there. Here is a view of it the game:
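A potential damage field like this is a form of what is often called an influence map. Below is a minimal Python sketch, assuming a simple square grid and hypothetical per-unit `dps` and `weapon_range` stats (Istrolid's actual unit data and map representation are not shown here). Each unit adds its damage per second to every cell within its weapon range, positive for the AI and negative for the human player, and an AI unit treats a cell as safe only when the net value is not negative.

```python
import math
from dataclasses import dataclass


@dataclass
class Unit:
    x: float
    y: float
    dps: float           # hypothetical stat: damage per second the unit can deal
    weapon_range: float  # hypothetical stat: radius of the unit's field, in cells
    is_ai: bool          # True for the AI's units, False for the human player's


def damage_field(units, width, height):
    """Return a height x width grid of net potential damage.

    AI units add a positive contribution and human units a negative one
    to every cell within their weapon range.
    """
    grid = [[0.0] * width for _ in range(height)]
    for u in units:
        sign = 1.0 if u.is_ai else -1.0
        r = u.weapon_range
        # Only visit cells inside the unit's bounding box, clipped to the grid.
        x0, x1 = max(0, math.floor(u.x - r)), min(width - 1, math.ceil(u.x + r))
        y0, y1 = max(0, math.floor(u.y - r)), min(height - 1, math.ceil(u.y + r))
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                if (x - u.x) ** 2 + (y - u.y) ** 2 <= r * r:
                    grid[y][x] += sign * u.dps
    return grid


def is_safe(grid, x, y):
    """A cell is safe for an AI unit if the AI's field cancels out the enemy's."""
    return grid[y][x] >= 0.0
```

Summing every unit into one shared grid means the field only has to be rebuilt once per update, after which each AI unit can check candidate positions with a cheap lookup.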

This is when I realized that it is important for the AI to know when one unit counters another. This is probably the most important factor in determining the success of an engagement. I am trying to come up with a system where, for each unit the player builds, the AI is aware of it and builds a unit that can adequately counter it. This poses a particular challenge for this game: because player units are built out of parts, the player can build a unit the AI has never seen before and does not know what to do with. I have prototyped a system where the AI runs a mini simulation, a game within a game, in which the player's unit is tested against the units available to the AI so that it can try to find the best one. Sometimes, however, none of its ships are capable of countering that unit at the same cost. A truly challenging AI might need access to as wide an array of units as possible, maybe even every unit ever built.

The other limitation of this system is that it simulates the player's actions as if the player plays like the AI does. This leads to two scenarios. In the first, the player is not actually capable of executing as well as the AI (if we program in perfect kiting and projectile dodging, the player might not be able to perform at that level), so the AI misses a counter that would have worked against the player. In the second, more likely scenario, the AI fails to control the player's unit as well as the player would, and picks a weaker unit that it only thinks is strong against it because the AI is, itself, too stupid to use the player's unit correctly.
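The counter search can be sketched roughly like this. It is a minimal Python illustration, not the prototype described here: the `Design` type, its `cost`/`hp`/`dps` stats, and the tick-based duel are hypothetical simplifications that ignore range, speed, kiting, and projectile dodging. To compare at equal cost, it scales each candidate's HP and DPS by how many copies the AI could buy for the price of the player's unit, keeps the candidate that wins with the largest fraction of its HP left, and returns `None` when no candidate wins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Design:
    name: str
    cost: float
    hp: float
    dps: float


def simulate_duel(a_hp, a_dps, b_hp, b_dps, dt=0.1, max_time=300.0):
    """Tick both sides forward until one dies or time runs out.

    Returns the remaining HP of each side, clamped at zero.
    """
    t = 0.0
    while a_hp > 0 and b_hp > 0 and t < max_time:
        # Both sides fire simultaneously each tick.
        a_hp, b_hp = a_hp - b_dps * dt, b_hp - a_dps * dt
        t += dt
    return max(a_hp, 0.0), max(b_hp, 0.0)


def best_counter(enemy: Design, candidates: list[Design]) -> Optional[Design]:
    """Pick the candidate that beats `enemy` most decisively at equal cost."""
    best, best_score = None, 0.0
    for c in candidates:
        # Spend what the enemy unit cost: treat n copies as one blob with
        # pooled HP and DPS (a simplification that ignores focus fire).
        n = enemy.cost / c.cost
        ours_left, theirs_left = simulate_duel(c.hp * n, c.dps * n, enemy.hp, enemy.dps)
        if theirs_left > 0:
            continue  # loses or stalls at equal cost, so it is not a counter
        score = ours_left / (c.hp * n)  # fraction of our HP that survives
        if score > best_score:
            best, best_score = c, score
    return best
```

Because both sides are reduced to pooled stats with no unit control at all, this sketch sidesteps the skill mismatch described above rather than solving it; plugging in the AI's real unit control would bring both of those biases back.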

While researching this, I was surprised to find that there is a huge divide between the academic AIs submitted to StarCraft AI competitions and the actual "AI" that ships with StarCraft and other RTS games. The "AI" that ships with most RTS games barely deserves the name, as it is nothing more than a bunch of scripts and prerecorded actions driven by a finite-state machine. It is not a complex learning AI, nor is it even based on a programmatic understanding of the game mechanics; rather, it simply follows a set of rules and triggers that fool the player just enough into thinking it is smart. Coming up with a scripted AI for Istrolid is made more difficult still because there are no strict unit classes or roles; they emerge as a result of the players' designs. While we could design a set of parameters that the AI applies to ships to figure out their roles, counters, and functions, players might come up with a completely different kind of ship that serves a totally new purpose. On the whole, an AI based on scripts, triggers, and rules feels cheap, but some hard-coded knowledge of how to react in the game is probably required. My solution will likely be a hybrid of several kinds of AI.
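For contrast, a scripted RTS AI of the kind described above often boils down to a small finite-state machine driven by triggers. The sketch below is a hypothetical Python example, not code from StarCraft or Istrolid: the states, the `army_value` and `enemy_near_base` triggers, and the threshold values are all made up for illustration. Note that none of the triggers reason about what the enemy's ships actually do, which is why this approach struggles when roles emerge from player designs.

```python
from enum import Enum, auto


class State(Enum):
    BUILD_UP = auto()
    ATTACK = auto()
    DEFEND = auto()


# Hypothetical hand-tuned trigger thresholds (total cost of the AI's army).
ATTACK_ARMY_VALUE = 1000
RETREAT_ARMY_VALUE = 300


def next_state(state: State, army_value: float, enemy_near_base: bool) -> State:
    """Return the next state given the current state and simple triggers."""
    if enemy_near_base:
        return State.DEFEND  # defending home overrides every other plan
    if state == State.DEFEND:
        return State.BUILD_UP  # threat is gone, go back to building
    if state == State.BUILD_UP and army_value >= ATTACK_ARMY_VALUE:
        return State.ATTACK
    if state == State.ATTACK and army_value < RETREAT_ARMY_VALUE:
        return State.BUILD_UP  # army is depleted, pull back and rebuild
    return state
```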

A bunch of AI games were played, so that was cool.